About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility and frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7,500 publications of agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 342 results for "Tim Dorscheidt"

The Evolution of Multiple Resistant Strains: An Abstract Model of Systemic Treatment and Accumulated Resistance

Benjamin Nye | Published Wednesday, August 31, 2011 | Last modified Saturday, April 27, 2013

This model is intended to explore the effectiveness of different courses of intervention on an abstract population of infections. Illustrative findings highlight how the mechanisms for variability and mutation affect the effectiveness of different interventions.

An Agent-Based Model of Collective Action

Hai-Hua Hu | Published Tuesday, August 20, 2013

We provide an agent-based model of collective action, informed by Granovetter (1978) and its replication model by Siegel (2009). We use the model to examine the role of ICTs in collective action under different cultural and political contexts.
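Granovetter's (1978) threshold mechanism, which this entry builds on, can be illustrated in a few lines. The sketch below is a minimal illustration assuming integer thresholds counted as "number of people already participating"; it is not the code of this model and leaves out the ICT, cultural and political factors the entry studies. The function name `granovetter_cascade` is hypothetical.

```python
def granovetter_cascade(thresholds: list[int]) -> int:
    """Return the equilibrium number of participants.

    An agent joins once the number of people already participating is at
    least its threshold; iterate until no further agent joins.
    """
    active = 0
    while True:
        # Everyone whose threshold is met by the current crowd size participates.
        joined = sum(1 for t in thresholds if t <= active)
        if joined == active:
            return active
        active = joined


uniform = list(range(100))                 # thresholds 0, 1, 2, ..., 99
perturbed = [0, 2] + list(range(2, 100))   # the agent with threshold 1 now needs 2
print(granovetter_cascade(uniform))    # 100
print(granovetter_cascade(perturbed))  # 1
```

With thresholds 0, 1, ..., 99 the crowd grows one agent at a time until everyone participates; raising the single threshold of 1 to 2 stalls the cascade after the first participant, which is the sensitivity to the threshold distribution that Granovetter highlighted.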

OMOLAND-CA: An Agent-Based Modeling of Rural Households’ Adaptation to Climate Change

Atesmachew Hailegiorgis, Andrew Crooks, Claudio Cioffi-Revilla | Published Tuesday, July 25, 2017 | Last modified Tuesday, July 10, 2018

The purpose of OMOLAND-CA is to investigate the adaptive capacity of rural households in the South Omo zone of Ethiopia with respect to variation in climate, socioeconomic factors, and land use at the local level.

DiDIY Factory

Ruth Meyer | Published Tuesday, February 20, 2018

The DiDIY-Factory model is a model of an abstract factory. Its purpose is to investigate the impact Digital Do-It-Yourself (DiDIY) could have on the domain of work and organisation.

DiDIY can be defined as the set of all manufacturing activities (and mindsets) that are made possible by digital technologies. The availability and ease of use of digital technologies, together with easily accessible shared knowledge, may allow anyone to carry out activities that were previously performed only by experts and professionals. In the context of work and organisations, the DiDIY effect shakes up organisational roles through this disintermediation of experts. It allows workers to overcome traditionally strict organisational hierarchies by giving them direct access to relevant information, e.g. the status of machines via real-time information systems implemented in the factory.

A simulation model of this general scenario needs to represent a more or less abstract manufacturing firm with supervisors, workers, machines and tasks to be performed. Experiments with such a model can then be run to investigate organisational structures ranging from a strict hierarchy to a self-organised, seemingly anarchic organisation.

Crowdworking Model

Georg Jäger | Published Wednesday, September 25, 2019

The purpose of this agent-based model is to compare different variants of crowdworking in a general way, so that the results obtained are independent of the specific details of any crowdworking platform. It features many adjustable parameters that can be used to calibrate the model to empirical data, but even without calibration it yields essential results about crowdworking in general.

Agents compete for contracts on a virtual crowdworking platform. Each agent is defined by properties such as qualification and income expectation. Agents that are unable to turn a profit have a chance to quit the crowdworking platform, and new crowdworkers can replace them. The model thus has features of an evolutionary process, filtering out ill-suited agents and generating a realistic distribution of agents from an initially random one. To simulate a stable system, both the number of contracts issued per day and the number of crowdworkers can be held constant. For a dynamically changing platform, the simulation can instead be initialized so that the number of crowdworkers or the number of contracts increases or decreases over time. A large variety of scenarios can thus be investigated.
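The replacement mechanism described above can be sketched as a simple evolutionary loop with a constant number of contracts and crowdworkers per day. This is a minimal illustration under stated assumptions, not Jäger's implementation: the class `Worker`, the functions `random_worker` and `step`, the payment range, the daily cost, and the rule that a contract goes to a bidder with probability proportional to qualification are all hypothetical choices made for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class Worker:
    qualification: float       # hypothetical skill level in [0.1, 1.0]
    income_expectation: float  # hypothetical minimum payment the worker accepts
    balance: float = 0.0       # cumulative earnings minus daily costs


def random_worker(rng: random.Random) -> Worker:
    """Draw a newcomer with random properties (the initially random population)."""
    return Worker(rng.uniform(0.1, 1.0), rng.uniform(0.2, 1.0))


def step(workers: list[Worker], n_contracts: int, daily_cost: float,
         quit_prob: float, rng: random.Random) -> list[Worker]:
    """Simulate one day with a constant contract count and workforce size."""
    for _ in range(n_contracts):
        payment = rng.uniform(0.2, 1.0)
        # Only workers whose income expectation is met bid on the contract.
        bidders = [w for w in workers if w.income_expectation <= payment]
        if bidders:
            # Better-qualified bidders are proportionally more likely to win.
            winner = rng.choices(bidders, weights=[w.qualification for w in bidders])[0]
            winner.balance += payment
    next_workforce = []
    for w in workers:
        w.balance -= daily_cost
        # Unprofitable workers quit with some probability and a newcomer replaces them.
        if w.balance < 0 and rng.random() < quit_prob:
            next_workforce.append(random_worker(rng))
        else:
            next_workforce.append(w)
    return next_workforce


rng = random.Random(42)
workers = [random_worker(rng) for _ in range(50)]
for _ in range(200):
    workers = step(workers, n_contracts=20, daily_cost=0.3, quit_prob=0.1, rng=rng)
print(len(workers))  # 50: the workforce size stays constant
```

Setting `quit_prob=0` switches off the filtering, which gives a baseline for comparing the evolved distribution of `qualification` with the initial random one.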

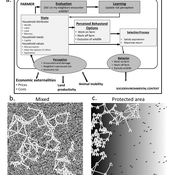

Wildlife-Human Interactions in Shared Landscapes (WHISL)

Nicholas Magliocca, Neil Carter, Andres Baeza-Castro | Published Friday, May 22, 2020

This model simulates a group of farmers who have encounters with individuals of a wildlife population. Each farmer owns a set of cells that represent their farm and must decide which of those cells will be used to produce an agricultural good that is sold in an external market at a given price. The farmer must also decide whether to protect the farm from potential encounters with individuals of the wildlife population; in the model, this decision is called “fencing”. Each time a cell is fenced, the probability that a wildlife individual moves to that cell is reduced. Each encounter reduces the output of the affected cell. Farmers can therefore reduce the risk of encounters through exclusion. The decision to exclude wildlife is based on the perceived risk of encounters. In the model, risk perception is subjective: it depends on past encounters and on the risk perceptions of other farmers in the community, who pass this information to one another through a social network. The user (observer) of the model can control how strongly the social network influences each farmer's perception of risk.
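The risk-perception and fencing logic described above can be sketched as follows. The linear blend of a farmer's own risk with the average risk of their network neighbours, the fencing threshold, and the multiplicative effect of fencing on wildlife movement are assumptions made for illustration; the names `perceived_risk`, `decide_fencing`, `entry_probability` and `social_weight` are hypothetical and do not come from the WHISL code. Here `social_weight` plays the role of the user-controlled importance of the social network.

```python
def perceived_risk(own_risk: dict[str, float],
                   network: dict[str, list[str]],
                   social_weight: float) -> dict[str, float]:
    """Blend each farmer's own risk with the mean risk of their network neighbours.

    social_weight = 0 ignores the network; social_weight = 1 relies on it entirely.
    """
    blended = {}
    for farmer, risk in own_risk.items():
        neighbours = network.get(farmer, [])
        if neighbours:
            social = sum(own_risk[n] for n in neighbours) / len(neighbours)
        else:
            social = risk  # an isolated farmer relies only on their own experience
        blended[farmer] = (1 - social_weight) * risk + social_weight * social
    return blended


def decide_fencing(risk: dict[str, float], threshold: float) -> dict[str, bool]:
    """A farmer fences when perceived risk exceeds a (hypothetical) threshold."""
    return {farmer: r > threshold for farmer, r in risk.items()}


def entry_probability(base_prob: float, fenced: bool, fence_effect: float) -> float:
    """Chance that a wildlife individual moves into a cell; fencing scales it down."""
    return base_prob * (1 - fence_effect) if fenced else base_prob


own = {"A": 0.8, "B": 0.1, "C": 0.0}  # e.g. share of recent steps with an encounter
links = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
risk = perceived_risk(own, links, social_weight=0.5)
fences = decide_fencing(risk, threshold=0.3)
print(fences)  # {'A': True, 'B': False, 'C': False}
```

Raising `social_weight` pulls farmers with little direct experience (here B and C) toward the risk levels reported by their neighbours, which is one simple way to represent the user-controlled influence of the social network.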

Credit and debt market of low-income families (peer reviewed)

Márton Gosztonyi | Published Tuesday, December 12, 2023 | Last modified Friday, January 19, 2024

The purpose of the Credit and debt market of low-income families model is to help the user examine how the financial market of low-income families works.

The model is calibrated on real-time data collected in a small, disadvantaged village in Hungary; the data cover the social network and attributes of 159 households.

The simulation models households' money liquidity, expenses and revenue structures, as well as the formal and informal loan institutions based on their network connections. The model forms an intertwined system, embedded in the families' local socioeconomic context, through which families handle financial crises and overcome their livelihood challenges from one month to the next.

The simulation is based on the abstract model of low-income families’ financial survival system at the bottom of the pyramid, which was described in the following papers:

…

Dynamic Value-based Cognitive Architectures (peer reviewed)

Bart de Bruin | Published Tuesday, November 30, 2021

The intention of this model is to create a universal basis for modelling change in value prioritizations within social simulation. This model illustrates the design of heterogeneous populations within agent-based social simulations by equipping agents with Dynamic Value-based Cognitive Architectures (DVCA-model). The DVCA-model uses the psychological theory of values by Schwartz (2012) and the theory of character traits by McCrae and Costa (2008) to create a unique trait and value prioritization system for each individual. Furthermore, the DVCA-model simulates the impact of both social persuasion and life events (e.g. information, experience) on individuals' value systems by introducing the innovative concept of perception thermometers. Perception thermometers, controlled by the character traits, act as buffers between agents' internal value prioritizations and their external interactions. Through perception thermometers, the DVCA-model makes it possible to study the dynamics of individual value prioritizations under a variety of internal and external perturbations over extended time periods. Possible applications include artificial sociality, opinion dynamics, social learning modelling, behavior selection algorithms and socio-economic modelling.

LogoClim: WorldClim in NetLogo

Daniel Vartanian, Leandro Garcia, Aline Martins de Carvalho, Aline | Published Thursday, July 03, 2025 | Last modified Tuesday, September 16, 2025

LogoClim is a NetLogo model for simulating and visualizing global climate conditions. It allows researchers to integrate high-resolution climate data into agent-based models, supporting reproducible research in ecology, agriculture, environmental sciences, and other fields that rely on climate data.

The model utilizes raster data to represent climate variables such as temperature and precipitation over time. It incorporates historical data (1951-2024) and future climate projections (2021-2100) derived from global climate models under various Shared Socioeconomic Pathways (SSPs, O’Neill et al., 2017). All climate inputs come from WorldClim 2.1, a widely used source of high-resolution, interpolated climate datasets based on weather station observations worldwide (Fick & Hijmans, 2017).

LogoClim follows the FAIR Principles for Research Software (Barker et al., 2022) and is openly available on the CoMSES Network and GitHub. See the Logônia model for an example of its integration into a full NetLogo simulation.

Knowledge Based Economy

Guido Fioretti, Sirio Capizzi, Ruggero Rossi, Martina Casari, Ala Jlif | Published Tuesday, May 18, 2021

Knowledge Based Economy (KBE) is an artificial economy in which firms located in geographical space develop original knowledge, imitate one another and eventually recombine pieces of knowledge. In KBE, consumer value arises from the capability of certain pieces of knowledge to bridge between existing items (e.g., Steve Jobs presented the first smartphone by explaining that you could make a call with it, but also listen to music and navigate the Internet). Since KBE includes a mechanism for the generation of value, it works without utility functions and does not need to model market exchanges.
