About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications of agent-based and individual-based models, with additional detailed metadata on the availability of code and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 4 of 4 results for "artificial intelligence"

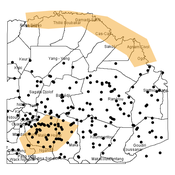

Agent-based modeling of the spatio-temporal distribution of Sahelian transhumant herds

Cheick Amed Diloma Gabriel TRAORE, Etienne DELAY, Alassane Bah, Djibril Diop | Published Tuesday, May 20, 2025

Sahelian transhumance is a seasonal pastoral mobility between the transhumant’s terroir of origin and one or more host terroirs. Sahelian transhumance can last several months and extend over hundreds of kilometers. Its purpose is to ensure efficient and inexpensive feeding of the herd’s ruminants. This paper describes an agent-based model to determine the spatio-temporal distribution of Sahelian transhumant herds and their impact on vegetation. Three scenarios based on different values of rainfall and the proportion of vegetation that can be grazed by transhumant herds are simulated. The results of the simulations show that the impact of Sahelian transhumant herds on vegetation is not significant and that rainfall does not impact the alley phase of transhumance. The beginning of the rainy season has a strong temporal impact on the spatial distribution of transhumant herds during the return phase of transhumance.

Agent-Based Model for Analyzing the Impact of Movement Factors of Sahelian Transhumant Herds

Cheick Amed Diloma Gabriel TRAORE | Published Tuesday, May 28, 2024

Transhumants move their herds based on strategies that simultaneously consider several environmental and socio-economic factors. There is no agreement on the influence of each factor in these strategies. In addition, there is a discussion about the social aspect of transhumance and how to manage pastoral space. In this context, agent-based modeling can analyze herd movements according to the strategy based on factors favored by the transhumant. This article presents a reductionist agent-based model that simulates herd movements based on a single factor. Model simulations are based on algorithms that formalize the behavioral dynamics of transhumants through their strategies. The model results establish that vegetation, water outlets and the socio-economic network of transhumants have a significant temporal impact on transhumance. Water outlets and the socio-economic network have a significant spatial impact. The significant impact of the socio-economic factor demonstrates the social dimension of Sahelian transhumance. Veterinarians and markets have an insignificant spatio-temporal impact. To manage pastoral space, water outlets should be at least 15 km from each other. The construction of veterinary centers, markets and the securitization of transhumance should be carried out close to villages and rangelands.

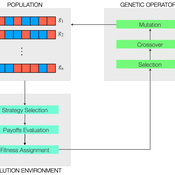

Peer reviewed Evolutionary Economic Learning Simulation: A Genetic Algorithm for Dynamic 2x2 Strategic-Form Games in Python

Vinicius Ferraz, Thomas Pitz | Published Friday, April 08, 2022

This project combines game theory and genetic algorithms in a simulation model for evolutionary learning and strategic behavior. It is often observed in the real world that strategic scenarios change over time, and deciding agents need to adapt to new information and environmental structures. Yet, game theory models often focus on static games, even for dynamic and temporal analyses. This simulation model introduces a heuristic procedure that enables these changes in strategic scenarios with Genetic Algorithms. Using normalized 2x2 strategic-form games as input, computational agents can interact and make decisions using three pre-defined decision rules: Nash Equilibrium, Hurwicz Rule, and Random. The games are then allowed to change over time as a function of the agents’ behavior through crossover and mutation. As a result, strategic behavior can be modeled in several simulated scenarios, and their impacts and outcomes can be analyzed, potentially transforming conflictual situations into harmony.
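The ingredients named in this abstract can be illustrated with a minimal Python sketch. It is not the authors' code: the function names, the flat 8-gene encoding of a game, the optimism coefficient `alpha`, and the mutation `rate` are all assumptions made for illustration. It shows a pure-strategy Nash equilibrium check, the Hurwicz rule (a weighted mix of each action's best and worst payoff), a random rule, and single-point crossover and mutation applied to the payoffs of a normalized 2x2 game.

```python
import random

# Assumed encoding: payoffs normalized to [0, 1].
# row_pay[i][j] / col_pay[i][j] = payoff to the row / column player
# when row plays action i and column plays action j.

def pure_nash_equilibria(row_pay, col_pay):
    """Return all pure-strategy Nash equilibria (i, j) of a 2x2 game."""
    equilibria = []
    for i in range(2):
        for j in range(2):
            row_ok = row_pay[i][j] >= row_pay[1 - i][j]   # row cannot gain by switching
            col_ok = col_pay[i][j] >= col_pay[i][1 - j]   # column cannot gain by switching
            if row_ok and col_ok:
                equilibria.append((i, j))
    return equilibria

def hurwicz_choice(own_payoffs, alpha=0.5):
    """Hurwicz rule: pick the action maximizing alpha*best + (1 - alpha)*worst.

    own_payoffs[i] lists the chooser's payoffs for action i against each
    opponent action; alpha is the (assumed) optimism coefficient.
    """
    scores = [alpha * max(p) + (1 - alpha) * min(p) for p in own_payoffs]
    return max(range(len(scores)), key=scores.__getitem__)

def random_choice(rng):
    """Random rule: pick either action with equal probability."""
    return rng.randrange(2)

def crossover(genes_a, genes_b, rng):
    """Single-point crossover of two games stored as flat payoff lists."""
    point = rng.randrange(1, len(genes_a))
    return genes_a[:point] + genes_b[point:]

def mutate(genes, rng, rate=0.1):
    """Replace each payoff with a fresh value in [0, 1) with probability `rate`."""
    return [rng.random() if rng.random() < rate else g for g in genes]

# Example: a normalized Prisoner's Dilemma (action 0 = cooperate, 1 = defect).
pd_row = [[0.6, 0.0], [1.0, 0.2]]
pd_col = [[0.6, 1.0], [0.0, 0.2]]
```

With these payoffs, `pure_nash_equilibria(pd_row, pd_col)` finds mutual defection, `(1, 1)`, as the only pure equilibrium. How the published model selects among equilibria, sets the Hurwicz coefficient, or links agent behavior to crossover and mutation is not stated in the abstract and is not modeled here.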

This model presents an autonomous, two-lane driving environment with a single lane closure that can be toggled. The four driving scenarios - two baseline cases (based on the real world) and two experimental setups - are as follows:

- Baseline-1 is where cars are not informed of the lane closure.

- Baseline-2 is where a Red Zone is marked wherein cars are informed of the lane closure ahead.

- Strategy-1 is where cars use a co-operative driving strategy - FAS. <sup>[1]</sup>

- Strategy-2 is a variant of Strategy-1 and uses comfortable deceleration values instead of the vehicle’s limit.
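To give a sense of why the choice of deceleration in Strategy-2 matters, the sketch below uses the standard constant-deceleration stopping distance, d = v² / (2a). The speed and both deceleration values are hypothetical placeholders, not parameters taken from the model, and the function name is an assumption.

```python
def stopping_distance(speed_mps: float, decel_mps2: float) -> float:
    """Distance (m) needed to stop from speed_mps at constant deceleration decel_mps2."""
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    return speed_mps ** 2 / (2 * decel_mps2)

# Hypothetical values for illustration only (not from the model).
SPEED = 20.0               # m/s
COMFORTABLE_DECEL = 2.0    # m/s^2, assumed "comfortable" braking
LIMIT_DECEL = 8.0          # m/s^2, assumed vehicle braking limit

comfortable = stopping_distance(SPEED, COMFORTABLE_DECEL)  # 100.0 m
at_limit = stopping_distance(SPEED, LIMIT_DECEL)           # 25.0 m
```

Under these assumed numbers, braking comfortably needs four times the distance of braking at the limit, so a strategy built on comfortable deceleration has to start reacting to the lane closure much earlier.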

…