Cliff Walking with Q-Learning NetLogo Extension (1.0.0)

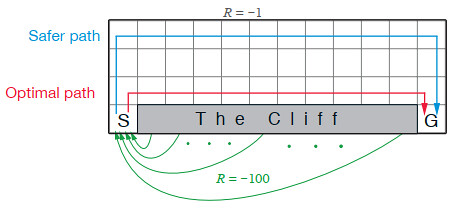

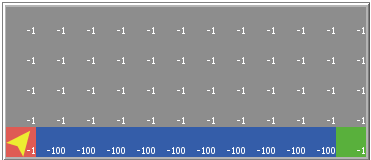

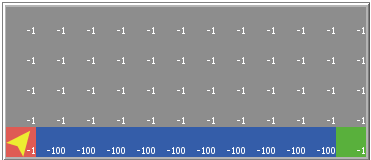

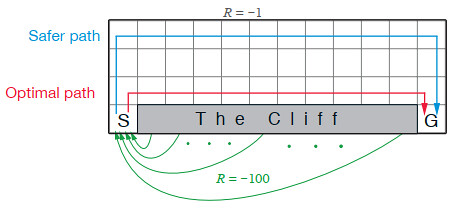

This model implements a classic Reinforcement Learning scenario, the “Cliff Walking Problem”. Consider the gridworld shown in the accompanying images (SUTTON; BARTO, 2018). This is a standard undiscounted, episodic task, with start and goal states, and the usual actions causing movement up, down, right, and left. The reward is -1 on all transitions except those into the region marked “The Cliff.” Stepping into this region incurs a reward of -100 and sends the agent instantly back to the start (SUTTON; BARTO, 2018).
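The transition and reward rules described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the model's NetLogo code: the 4 x 12 grid size and the start and goal positions follow the layout of the example in Sutton and Barto, and the names `step`, `is_cliff`, `START`, and `GOAL` are hypothetical. It also assumes that a move off the edge of the grid leaves the agent where it is.

```python
# Assumed layout (from the Sutton & Barto example): 4 rows x 12 columns,
# start in the bottom-left corner, goal in the bottom-right corner,
# and the cliff occupying the bottom-row cells between them.
ROWS, COLS = 4, 12
START = (3, 0)
GOAL = (3, 11)

# Each action maps to a (row change, column change) pair.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def is_cliff(state):
    """Return True if the cell lies in the cliff region."""
    row, col = state
    return row == ROWS - 1 and 0 < col < COLS - 1


def step(state, action):
    """Apply one action and return (next_state, reward, done)."""
    d_row, d_col = ACTIONS[action]
    # Assumption: moves that would leave the grid keep the agent in place.
    row = min(max(state[0] + d_row, 0), ROWS - 1)
    col = min(max(state[1] + d_col, 0), COLS - 1)
    next_state = (row, col)
    if is_cliff(next_state):
        # Falling off the cliff costs -100 and resets the agent to the start;
        # the episode continues.
        return START, -100, False
    # Every other transition costs -1; the episode ends at the goal.
    return next_state, -1, next_state == GOAL
```

For example, `step(START, "right")` moves into the cliff, so it returns the start state with a reward of -100, while `step((2, 11), "down")` reaches the goal and ends the episode.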

The problem is solved in this model using the Q-Learning algorithm. The algorithm is implemented with the support of the NetLogo Q-Learning Extension.
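For readers unfamiliar with Q-Learning, the core of the tabular algorithm can be sketched as follows. This is a generic Python illustration and does not reproduce the API of the NetLogo Q-Learning Extension. The function names (`make_q_table`, `choose_action`, `q_update`) and the learning rate `alpha=0.5` are hypothetical choices, and `gamma=1.0` reflects the undiscounted task described above.

```python
import random
from collections import defaultdict

ACTIONS = ["up", "down", "left", "right"]


def make_q_table():
    """Create a Q-table in which every unseen state starts with zeros."""
    return defaultdict(lambda: {a: 0.0 for a in ACTIONS})


def choose_action(q, state, epsilon, rng=random):
    """Epsilon-greedy selection: explore with probability epsilon."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    values = q[state]
    return max(values, key=values.get)


def q_update(q, state, action, reward, next_state, done, alpha=0.5, gamma=1.0):
    """Apply one Q-Learning update and return the new Q(state, action).

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    # A terminal next state contributes no future value.
    best_next = 0.0 if done else max(q[next_state].values())
    td_target = reward + gamma * best_next
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]
```

Starting from an all-zero table, a single update for a -1 transition with `alpha=0.5` moves the estimate halfway toward the target, giving -0.5.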

Release Notes

To use this model, open it with NetLogo 6.1.0. You also need to add the Q-Learning Extension to your NetLogo installation, which can be done through the NetLogo Extension Manager.

Submitted by

Kevin Kons

Published Dec 10, 2019

Last modified Dec 19, 2019
